Alaya AI is an innovative artificial intelligence platform. It’s designed to enhance user experiences with smart automation. In the digital age, businesses constantly seek advanced technologies to streamline operations and…

The best AI stocks include industry leaders such as NVIDIA, Alphabet, and Microsoft, known for their robust AI initiatives. Investors consider these stocks promising because of their strong AI-driven product portfolios. Artificial…

Character AI NSFW refers to the use of artificial intelligence to generate adult-themed content. These algorithms can produce text, images, or animations that are not safe for work (NSFW). Creating NSFW content through…

Canvas Artificial Intelligence (AI) refers to the advanced computational systems embedded within the Canvas Learning Management System (LMS). These AI capabilities enhance the user experience by automating functions and providing…

To create AI-generated hentai, use software with deep learning capabilities. An AI hentai generator designs custom imagery based on algorithmic interpretations. Exploring the forefront of AI technology, artists and developers…

Explore the cutting-edge ‘Money Methods with AI 2024’, a comprehensive guide to leveraging artificial intelligence for wealth generation. Dive into innovative strategies for automation, content creation, and data analysis to…

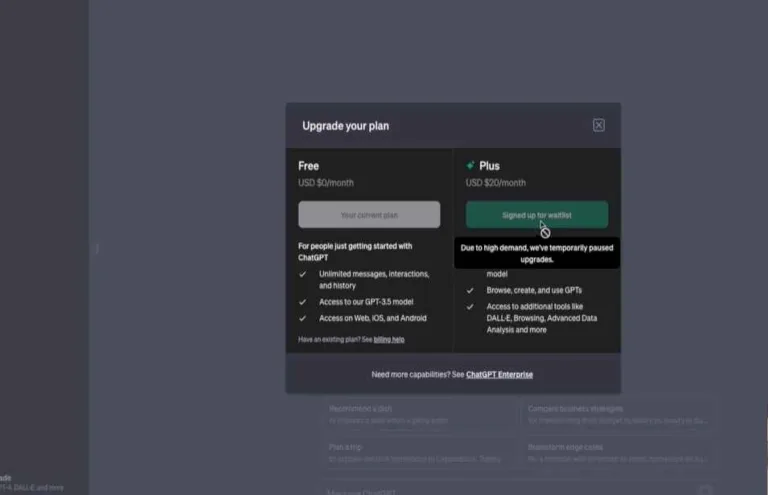

To access ChatGPT 4, visit OpenAI’s website or use a platform that integrates the model. Sign up for access if required, or log…

AI is revolutionizing the media and entertainment industry by enhancing content personalization and streamlining production processes. It also offers predictive analytics and audience insights. Learn more about the Role of AI in Media And…

Explore how AI in the Energy Sector is revolutionizing energy management, enhancing operational efficiency, and paving the way for sustainable solutions. Discover the latest innovations and trends in artificial intelligence…

In an era where digital deception is escalating, McAfee has introduced a groundbreaking AI-Based Deepfake Audio Detection technology, marking a significant advancement in cybersecurity. This innovative solution is tailored to…